The Evaluate tab in Freddy AI is your go-to tool for testing and improving your AI agent's responses. Whether you're preparing for deployment or fine-tuning performance, this feature allows admins to simulate real-world scenarios, review bot behavior, and refine responses to ensure your AI agent meets customer expectations.

Note: This is currently available for customers in the US, EU, AU and IND regions and will soon be released in phases in other regions.

Begin your first evaluation

To evaluate and optimize your Freddy AI Agent, follow these key steps designed to test, refine, and improve its responses for better customer support.

Add queries

Run queries

Review results

Add queries

In the left navigation bar, go to AI Agent Studio. On the AI Agent Studio page, click the AI agent you want to evaluate. The agent should already have the required knowledge sources added.

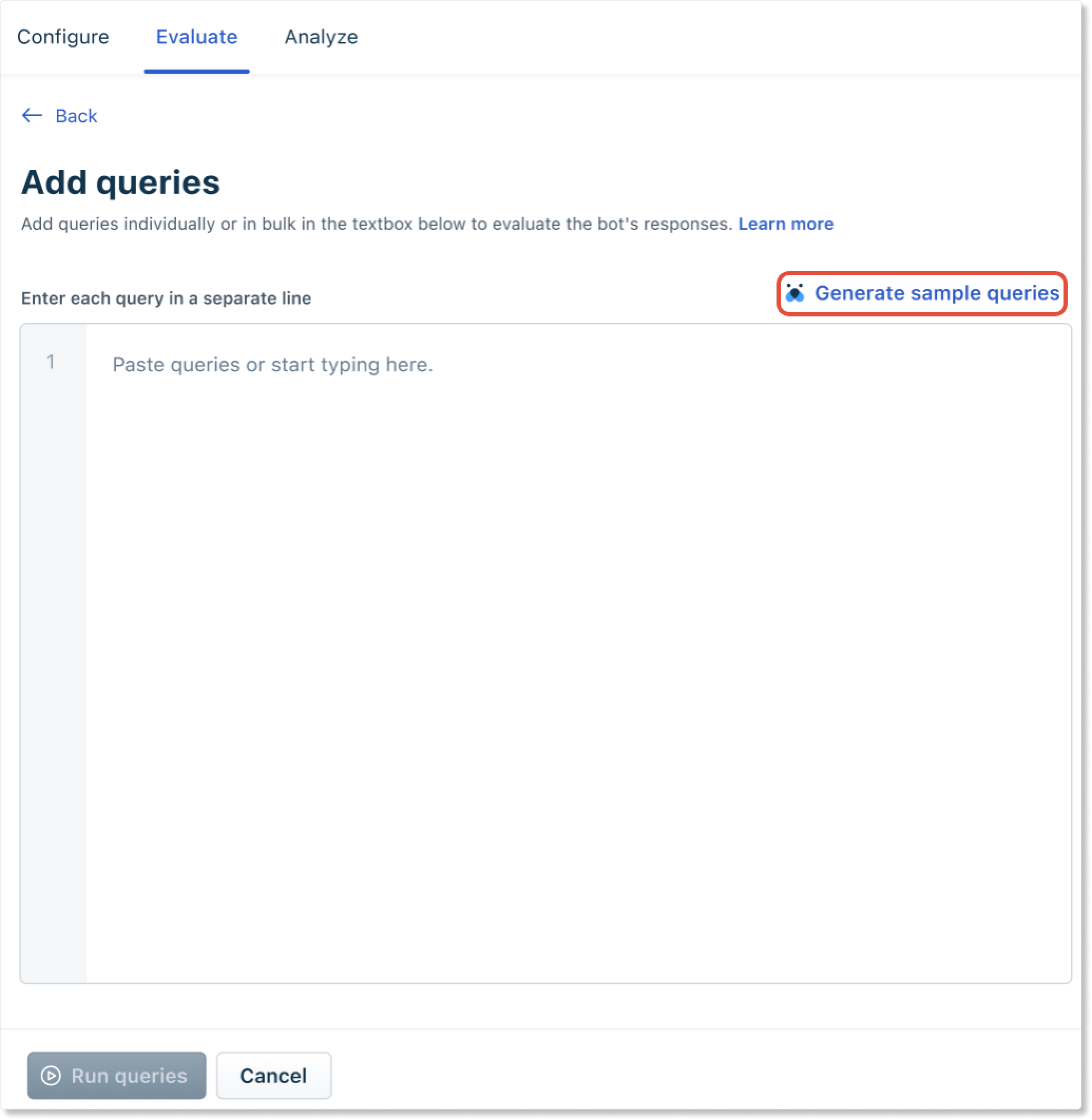

Click Evaluate > +Add queries.

In the text box, type your queries or paste them in bulk.

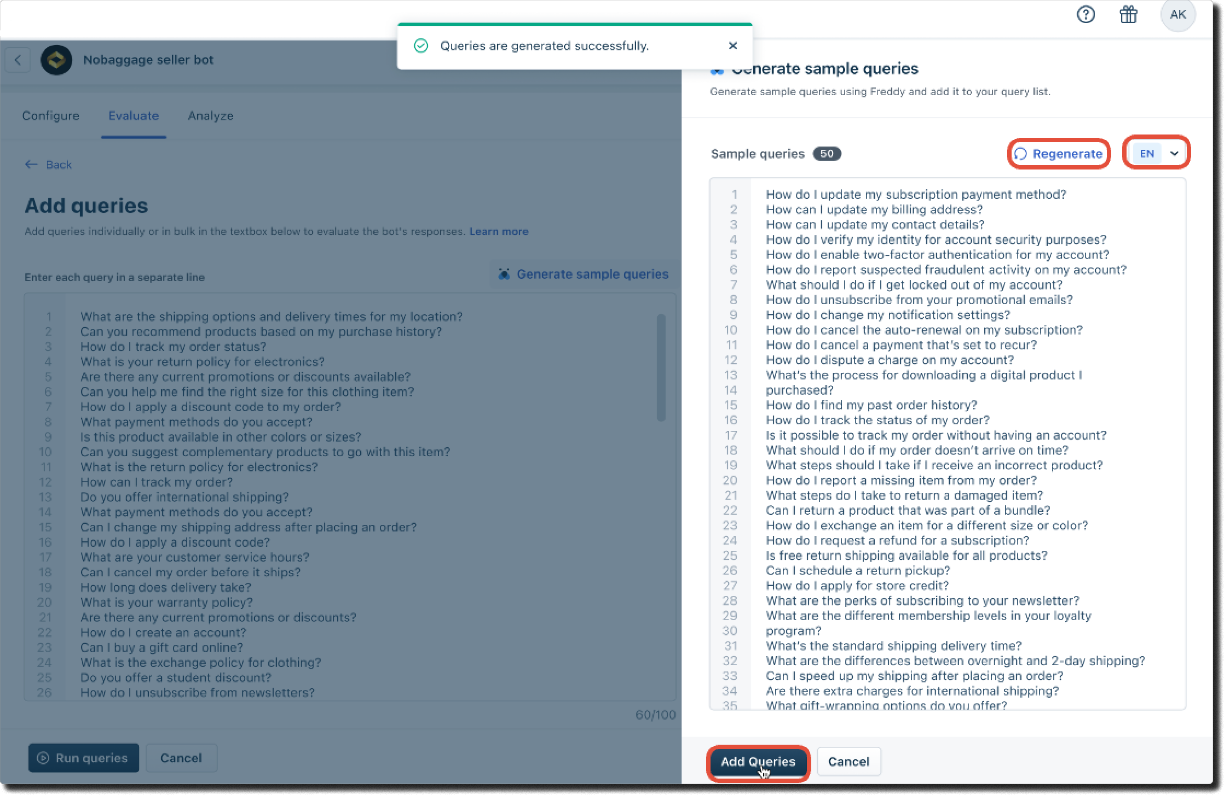

Note: Enter each query on a separate line. You can add up to 100 test queries to be executed in a single run.

Alternatively, click Generate Sample Queries to let Freddy AI create a list of 50 sample queries based on the knowledge sources configured for the AI agent.

Note: If no knowledge sources have been added to the AI agent, Freddy cannot generate the sample query list and displays the error: Please add Knowledge Sources to generate queries.

Review the generated queries, edit them if needed, or click Regenerate for a new set.

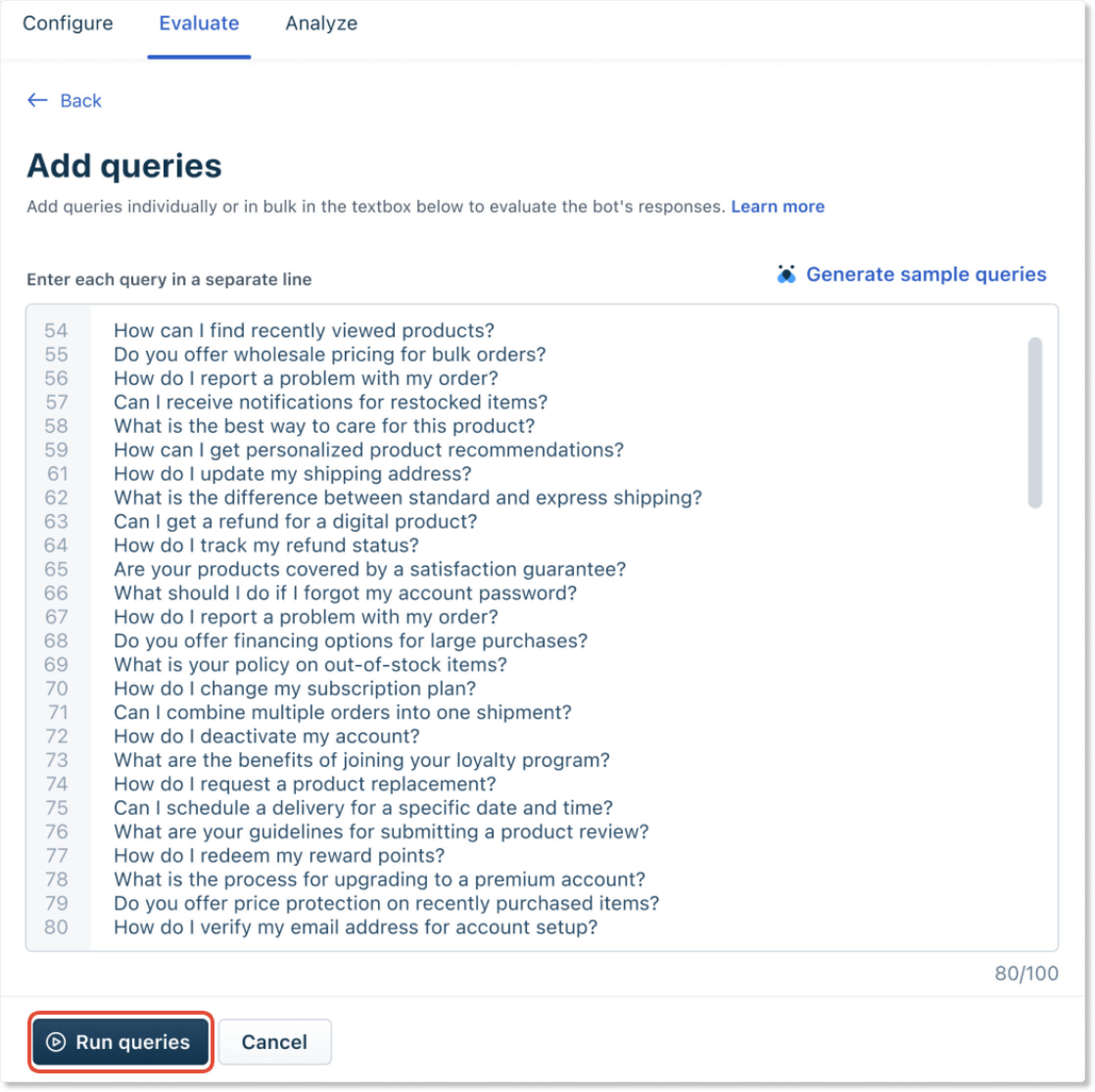

Click Add Queries to include them in your query list.

Note: If the total number of queries exceeds 100, you’ll see the error: Query limit exceeded; please remove the additional queries to proceed.

You can also translate the generated queries into your preferred languages by selecting the language code from the Language dropdown.
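If you maintain your test queries in a text file, it can help to check them against the one-query-per-line format and the 100-query limit before pasting them into the text box. The following is a minimal sketch of such a check, not part of Freddy AI: the function name `prepare_queries` is hypothetical, and dropping blank lines and case-insensitive duplicates is an assumption made here for convenience, not documented product behavior.

```python
MAX_QUERIES_PER_RUN = 100  # limit stated in this article


def prepare_queries(raw_text: str, limit: int = MAX_QUERIES_PER_RUN) -> list[str]:
    """Return one cleaned query per line, ready to paste into the text box.

    Blank lines and case-insensitive duplicates are dropped (an assumption
    of this sketch). Raises ValueError if more than `limit` queries remain.
    """
    seen = set()
    queries = []
    for line in raw_text.splitlines():
        query = line.strip()
        key = query.lower()
        if not query or key in seen:
            continue
        seen.add(key)
        queries.append(query)
    if len(queries) > limit:
        extra = len(queries) - limit
        raise ValueError(
            f"{len(queries)} queries exceed the limit of {limit}; remove {extra}."
        )
    return queries


if __name__ == "__main__":
    # Illustrative input only; replace with the contents of your own file.
    sample = "How do I reset my password?\n\nWhat is your refund policy?\n"
    print("\n".join(prepare_queries(sample)))
```

Running the check locally surfaces an over-limit list before you reach the Query limit exceeded error in the product.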

Run queries

Click Run queries to evaluate your AI agent’s performance.

The evaluation process may take a few minutes. Once complete, you’ll receive an email notification and an in-app alert.

You can cancel the run at any time by clicking Cancel Run.

If errors occur during the run or if the run is canceled, the admin receives an email notification.

Review results

Once the evaluation is complete, the results are displayed in four sections:

Summary

Query Details

Actions

Filters and Additional Options

Additionally, the results are emailed to the admin who ran the test, which makes them easy to access and keep on record. Admins can also download older test results directly from these emails later.

Section 1: Summary

Answered: Number of queries the AI agent successfully answered.

Unanswered: Number of queries the AI agent couldn’t answer.

User Rating: Number of thumbs up (positive) and thumbs down (negative) ratings for the responses.

Section 2: Query Details

A table displaying:

Queries: The list of test queries.

Status: Whether the query was answered or unanswered.

Responses: The AI agent’s responses.

Answer Source: The knowledge source used for the response.

Rate Responses: Option to provide a thumbs up or thumbs down rating.

Note: Rating responses with thumbs up or thumbs down does not train or improve the AI's capabilities. Instead, these ratings serve as a personal reference for you to review conversations and share insights with your team.

Section 3: Actions

Export: Download the evaluation report as a PDF.

Manage Queries: Edit or remove queries from the list.

Rerun Queries: Re-evaluate the AI agent with the same or updated queries.

Section 4: Filters and Additional Options

Search Queries: Use the search bar to find specific queries.

Filter: Narrow down results by:

Response Type: All, Unanswered, or Answered.

Evaluation: All, Accepted, Rejected, or Not Evaluated.

Add QnA: For unanswered queries, click Add QnA and provide an answer so that the AI agent can answer the question the next time it is asked.

Retry in preview: Select a query and retry it in preview mode to see how the AI agent answers that customer query.

Best Practices for Optimizing Your AI Agent

Test Diverse Scenarios: Include a mix of common, complex, and edge-case queries to ensure your AI agent is well-rounded.

Refine Knowledge Sources: Regularly update and expand your knowledge base to improve response accuracy.

Leverage User Feedback: Use thumbs up/down ratings to identify areas for improvement.

Iterate and Improve: Continuously evaluate and optimize your AI agent to keep up with evolving customer needs.